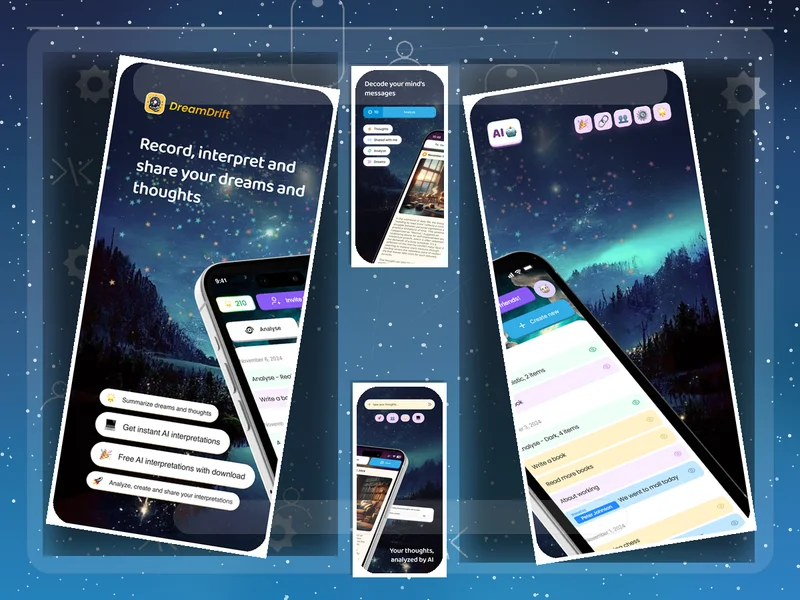

Consumer iOS App - DreamDrift

DreamDrift: shipping a fully featured, AI-powered iOS app from idea to App Store

I like projects that move from sketch to shipped product fast, without skipping the parts that matter: clean architecture, strong UX, analytics you can trust, and production-grade operations. DreamDrift is exactly that kind of build. In a couple of months of part-time work, I took an idea with zero lines of code and delivered a polished iOS app: a native Swift frontend, Firebase-backed serverless infrastructure on Google Cloud, AI integrations for analysis and image generation, privacy-aware social features, push and in-app real-time notifications, analytics in BigQuery, and an App Store listing supported by Apple Search Ads and Google Ads.

Project description / intro

DreamDrift is a consumer iOS app for journaling dreams and thoughts, turning entries into insights with LLMs, and optionally sharing with a small circle of friends via private invites. The experience is intentionally personal and privacy-forward—think “close friends,” not public timelines. The feature set includes structured journaling, single-entry and multi-entry AI analysis, AI image generation from text, private friend linking and sharing, push notifications, and in-app real-time updates powered by Firestore listeners. Monetization started with one-off in-app purchases for AI credits, with a path toward a lightweight subscription for premium features.

From day one, the goal was to ship a complete product, not just an MVP demo: instrumented analytics, guardrails around AI usage and cost, a clean SwiftUI interface, and a backend I could extend confidently without adding operational drag.

Business use case

DreamDrift was built as a focused, startup-style product experiment: a fast, low-overhead way to test demand for an AI-enabled journaling and self-reflection tool with private social sharing. The business need was to validate whether modern LLMs could provide compelling, repeatable value to consumers in a domain—dreams and daily thoughts—where curiosity is high and the “insight loop” can be habit-forming. The primary goals were to ship quickly, prove engagement with real users, observe acquisition and retention through trustworthy analytics, and learn the cost profile of AI features at modest scale.

On the go-to-market side, I set up Apple Search Ads and Google Ads, wired attribution and funnels, and exported Firestore data to BigQuery for near-real-time insights across cohorts, content types, and AI usage patterns. The result was a clear feedback loop from marketing to product decisions.

Solution

Approach

I approached the build like I would any early-stage product: minimize undifferentiated heavy lifting, maximize the surface area devoted to user value, and keep every system observable and swappable. That led naturally to a serverless, Firebase-centric architecture on GCP, a native Swift/SwiftUI client, and a thin TypeScript/Cloud Functions layer that orchestrates identity, authorization, AI calls, and notifications.

How I arrived at the solution

Several paths were considered and pressure-tested for speed, maintainability, and cost:

- Client: Native Swift/SwiftUI over cross-platform frameworks to get a crisp iOS experience, leverage modern Swift concurrency, and simplify App Store compliance and notifications.
- Backend: Firebase over a self-managed Node/Go service on Cloud Run to remove ops from the critical path. Auth, Firestore, Functions, Analytics, and FCM interlock well and ship fast.
- AI: Managed LLM APIs instead of self-hosting open-source models on rented GPUs. I evaluated OpenAI (ChatGPT, DALL·E), Anthropic, Meta Llama variants via providers, Google Gemini, and popular image models. For open-source hosting, DeepInfra was attractive on price/performance, but for the initial release I prioritized supplier agility with a provider-agnostic abstraction that lets me swap models without touching the app.
- Analytics: Firebase Analytics and Firestore → BigQuery export for durable, queryable data with low setup overhead and strong tooling.
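
To make the provider-agnostic idea concrete, here is a minimal TypeScript sketch of how such an abstraction could look on the server side. It is an illustration under assumptions, not the app's actual code: `TextProvider`, `ProviderRegistry`, `makeStubProvider`, and the provider ids are hypothetical names, and the stub stands in for real API clients so the example runs without network access.

```typescript
// Hypothetical names throughout; this is a sketch, not DreamDrift's real code.

interface AnalysisRequest {
  prompt: string;
  maxOutputTokens: number;
}

interface AnalysisResult {
  text: string;
  providerId: string;
}

// Every backend (hosted LLM APIs, a self-hosted model, ...) would be wrapped
// in this one shape so callers never depend on a specific vendor SDK.
interface TextProvider {
  readonly id: string;
  analyze(request: AnalysisRequest): Promise<AnalysisResult>;
}

class ProviderRegistry {
  private providers = new Map<string, TextProvider>();
  private activeId: string | null = null;

  register(provider: TextProvider): void {
    this.providers.set(provider.id, provider);
    // The first registered provider becomes the default.
    if (this.activeId === null) this.activeId = provider.id;
  }

  // Switching providers is a server-side change; the iOS client is unaffected.
  use(id: string): void {
    if (!this.providers.has(id)) throw new Error(`Unknown provider: ${id}`);
    this.activeId = id;
  }

  get activeProviderId(): string | null {
    return this.activeId;
  }

  analyze(request: AnalysisRequest): Promise<AnalysisResult> {
    const provider =
      this.activeId !== null ? this.providers.get(this.activeId) : undefined;
    if (!provider) return Promise.reject(new Error("No provider registered"));
    return provider.analyze(request);
  }
}

// Stand-in provider so the sketch is runnable without calling a real API.
function makeStubProvider(id: string): TextProvider {
  return {
    id,
    analyze: async (request) => ({
      text: `[${id}] analysis of ${request.prompt.length} characters`,
      providerId: id,
    }),
  };
}
```

With this shape, the callable function that serves the app would only ever talk to the registry, so moving from one vendor to another comes down to registering a new adapter and calling `use` with its id.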

Tech stack

- iOS: Swift + SwiftUI, modern concurrency, clean modular structure; StoreKit for IAP.
- Identity & data: Firebase Auth; Firestore as the primary user/content store; Cloud Storage for AI-generated images.
- Backend logic: Firebase Cloud Functions (TypeScript) invoked via Firebase client SDK; Firestore triggers for notification fan-out.
- Notifications: FCM/APNs for push; Firestore listeners for in-app real-time updates (invites, acceptances, shared entries, new analyses).
- AI providers: Pluggable LLM and image backends with a thin orchestration layer to manage prompts, batching, retries, and cost guards.
- Analytics: Firebase Analytics events; Firestore → BigQuery export; GA4/Looker Studio for dashboards; ad attribution to close the loop.
- Payments: Apple in-app purchases for AI credits, cleanly separated from core free functionality.

Architecture overview

At a glance, the app is a native iOS client talking to Firebase services. Firestore stores users, dreams, thoughts, share links, and AI results. Cloud Functions handle analysis requests, rate and quota checks, prompt templating, and provider calls. Push notifications are delivered via FCM/APNs; in-app updates use Firestore listeners. Analytics events flow to Firebase Analytics, and Firestore data continuously lands in BigQuery for deeper analysis and cohorting.
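
As a rough illustration of the checks a Cloud Function might run before calling a provider, the sketch below combines prompt templating, a credit check, and a prompt length guard as pure functions. Everything specific here is an assumption made for the example: the template text, the `MAX_PROMPT_CHARS` limit, the `UserAccount` shape, and the function names are hypothetical. A real deployment would presumably read and update credits inside a Firestore transaction rather than on a plain object.

```typescript
// Hypothetical sketch: template text, limits, and field names are assumptions.

const MAX_PROMPT_CHARS = 4000; // assumed limit, not taken from the app

const ANALYSIS_TEMPLATE =
  "You are a thoughtful journaling assistant. Reflect on this entry:\n{{entry}}";

interface UserAccount {
  uid: string;
  credits: number;
}

type Decision =
  | { ok: true; prompt: string; remainingCredits: number }
  | { ok: false; reason: "no_credits" | "prompt_too_long" };

// Replace {{name}} placeholders with values; unknown placeholders stay as-is.
function renderTemplate(template: string, values: Record<string, string>): string {
  return template.replace(/\{\{(\w+)\}\}/g, (match: string, key: string) => {
    const value = values[key];
    return typeof value === "string" ? value : match;
  });
}

// Decide whether a request may proceed, and with which prompt.
function prepareAnalysis(user: UserAccount, entryText: string, cost: number): Decision {
  // Cost guard first: never build or send a prompt the user cannot pay for.
  if (user.credits < cost) return { ok: false, reason: "no_credits" };

  const prompt = renderTemplate(ANALYSIS_TEMPLATE, { entry: entryText });

  // Length guard: reject oversized prompts before they reach a paid API.
  if (prompt.length > MAX_PROMPT_CHARS) return { ok: false, reason: "prompt_too_long" };

  return { ok: true, prompt, remainingCredits: user.credits - cost };
}
```

Because the template lives on the server, changing the wording of a prompt does not require an app update, which matches the goal of keeping prompts out of the app bundle.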

Considered alternatives

- Supabase/Postgres vs Firestore: I chose Firestore for built-in offline sync, granular security rules, and first-class real-time listeners powering in-app “notifications.” Supabase remains a good fit for SQL-heavy domains or when you need Postgres features; for this product’s evented, document-centric data, Firestore was faster to deliver value.
- Cloud Run or a custom microservice: Functions were sufficient and faster to ship. Cloud Run would be my next step for heavy AI pipelines, long-running jobs, or when I need containerized runtime control.
- Self-hosting LLMs: The cost and complexity weren’t justified for an early consumer product. The provider-agnostic orchestration layer keeps that door open for the future.

Challenges

- AI inside a native app, sanely: With no first-party AI tooling in Xcode at the time, I built a lightweight prompt-templating and provider-switching system server-side, then exposed clean, stable APIs to the client. This avoided shipping prompts in the app bundle and made experiments safe and reversible.
- Quality, guardrails, and cost control: I added quotas and per-user credit accounting, prompt length checks, and exponential backoff with idempotent retries. Batching reduced per-request overhead for multi-entry analysis.
- Real-time + push: Users get in-app updates instantly via Firestore listeners and receive push notifications when backgrounded. I tuned listeners to minimize reads and used compact documents to keep costs predictable.
- App Store readiness: Clear privacy disclosures, opt-in sharing, and sensible defaults (private by default) meant a smooth review.
- Tooling reality: On the client, I used a mix of ChatGPT and Anthropic for code generation, plus Copilot/Cody in the serverless repo. Without deep Xcode integrations, I created a lightweight workflow to round-trip source snippets through AI and then refactor for idiomatic Swift.
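
The retry guardrail mentioned above can be sketched as follows. This is a minimal example of exponential backoff combined with an idempotency key, under stated assumptions: the function names, the 200 ms base delay, the 5 s cap, and the in-memory `resultsByKey` map are all hypothetical. Since Cloud Functions instances do not share memory, a production version would likely persist the key (for example in Firestore) instead of using a `Map`.

```typescript
// Hypothetical sketch: names, delays, and the in-memory cache are assumptions.

type Sleep = (ms: number) => Promise<void>;

const realSleep: Sleep = (ms) =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

// Delay doubles on each attempt (base, 2x, 4x, ...) and is capped at maxMs.
function backoffDelay(attempt: number, baseMs: number, maxMs: number): number {
  return Math.min(maxMs, baseMs * Math.pow(2, attempt));
}

// Run an operation up to maxAttempts times, sleeping between failures.
async function withRetry<T>(
  operation: () => Promise<T>,
  maxAttempts: number,
  sleep: Sleep = realSleep,
  baseMs = 200,
  maxMs = 5000
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    try {
      return await operation();
    } catch (error) {
      lastError = error;
      // Only wait if another attempt will follow.
      if (attempt < maxAttempts - 1) {
        await sleep(backoffDelay(attempt, baseMs, maxMs));
      }
    }
  }
  throw lastError;
}

// Idempotency: one key maps to one in-flight or finished result, so a client
// that re-sends the same request does not trigger a second provider call.
const resultsByKey = new Map<string, Promise<string>>();

function analyzeOnce(
  key: string,
  call: () => Promise<string>,
  sleep: Sleep = realSleep
): Promise<string> {
  const existing = resultsByKey.get(key);
  if (existing) return existing;

  const pending = withRetry(call, 3, sleep).catch((error) => {
    resultsByKey.delete(key); // after a final failure, allow a fresh attempt
    throw error;
  });
  resultsByKey.set(key, pending);
  return pending;
}
```

Passing the sleep function as a parameter keeps the retry logic testable without real delays. The idempotency key would typically be generated by the client per user action, so a retried tap reuses the earlier result instead of spending another credit.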

Scaling

While the initial target was learning-scale traffic, the design leans naturally into growth:

- Data plane: Firestore handles high read/write throughput with proper document sizing and collection patterns. Security rules keep access tight without pushing logic into the client.
- Compute plane: Cloud Functions scale automatically with concurrent load; the provider layer makes it easy to isolate heavy AI work and, when needed, offload specific workloads to Cloud Run.
- Images & assets: Cloud Storage scales without extra work for generated images and media.
- Cost and observability: Fine-grained Analytics events, BigQuery exports, and structured logs make it straightforward to track per-feature costs, measure CAC/LTV, and tune funnels without guessing.
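
To show what per-feature cost tracking could look like in practice, here is a small sketch that aggregates estimated AI spend by feature from usage events. The event fields, feature names, and prices are placeholders invented for the example, not data from the app. In a real setup the same aggregation would more likely run as a query over exported data.

```typescript
// Hypothetical sketch: event fields and prices are placeholders, not real data.

interface UsageEvent {
  feature: string; // e.g. "single_analysis" or "multi_analysis" (made-up names)
  inputTokens: number;
  outputTokens: number;
}

interface Pricing {
  inputPerMillion: number; // USD per 1M input tokens (placeholder value)
  outputPerMillion: number; // USD per 1M output tokens (placeholder value)
}

// Estimated cost of one provider call, based on its token counts.
function eventCost(event: UsageEvent, pricing: Pricing): number {
  return (
    (event.inputTokens / 1000000) * pricing.inputPerMillion +
    (event.outputTokens / 1000000) * pricing.outputPerMillion
  );
}

// Sum estimated cost per feature so expensive features stand out.
function costByFeature(events: UsageEvent[], pricing: Pricing): Map<string, number> {
  const totals = new Map<string, number>();
  for (const event of events) {
    const previous = totals.get(event.feature) ?? 0;
    totals.set(event.feature, previous + eventCost(event, pricing));
  }
  return totals;
}
```

Dividing each feature's total by the number of users who triggered it gives a rough per-user cost, which can then be compared against credit revenue for that feature.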

Using AI to build the app

I used AI aggressively—and responsibly—to compress the build timeline. Roughly 60% of the code started its life as AI-generated scaffolding that I then edited, hardened, and refactored. On the server side, IDE integrations made this smooth; on iOS, I built a pragmatic workflow to paste, test, and iterate quickly. Across client and backend, this saved an estimated 70–75% of the development time versus writing every line by hand, without compromising architecture, quality, or UX polish. The key was knowing where AI drafts were appropriate (boilerplate, adapters, tests, UI scaffolds) and where human judgment mattered most (data modeling, error paths, concurrency edges, and the product’s voice).

Conclusion

DreamDrift met its goals: I shipped a complete, production-ready iOS application with a crisp native experience, private social features, dependable push and real-time updates, pluggable AI analysis and image generation, a sensible monetization path, and analytics wired end-to-end from events to BigQuery to ad platforms. Because the architecture is serverless and managed, the operational footprint is tiny—most energy goes into product iteration, not infrastructure babysitting. The AI-augmented development process delivered real efficiency gains, turning a multi-month build into a part-time sprint without sacrificing quality.

From a business standpoint, this architecture keeps costs elastic and predictable: you pay when users engage, not for idle servers. From an engineering standpoint, it’s a clean foundation that can scale, swap AI providers as the landscape evolves, and add features like richer collaboration or scheduled analysis jobs with minimal churn.

Most importantly, it demonstrates the kind of end-to-end execution I value: start with an ambitious but focused idea, choose technologies that remove friction, wire in measurement from day one, and deliver a product that real users can download, use, and share. That’s momentum—and momentum is how young products find their market.